emmtrix Code Vectorizer (eCV)

The Tool to Vectorize Your Application

The vector units of upcoming microcontrollers promise to speed up data-parallel applications based on linear algebra by a factor of more than 10. Programming these accelerators manually is challenging because it requires deep knowledge of their instruction set and microarchitecture. emmtrix Code Vectorizer (eCV) significantly simplifies this task.

See our tool in action. Contact us to request a demo or to set up a chat or meeting.

Introduction to Vectorization

In their next generation of embedded, safety-certified MCUs, vendors like Infineon will incorporate so-called “vector units”: accelerators that perform the same operation on several data elements at the same time. This concept is known as single instruction, multiple data (SIMD). SIMD has long been used in desktop and server processors and is now finding its way into safety-critical embedded systems. With this kind of hardware, applications that rely heavily on linear algebra, such as sensor fusion or AI inference, can be sped up by a factor of more than ten.

From a programming point of view, these vector architectures share some principles with GPUs: they operate on many data elements simultaneously, and data must be kept in local memory to keep the processing units busy. However, they are still mostly programmed either manually, which is time-consuming and error-prone, or through predefined library functions, which limits the range of applications that can be accelerated.

For more information, we recommend our short vectorization series, consisting of three parts:

emmtrix Vectorization Workflow

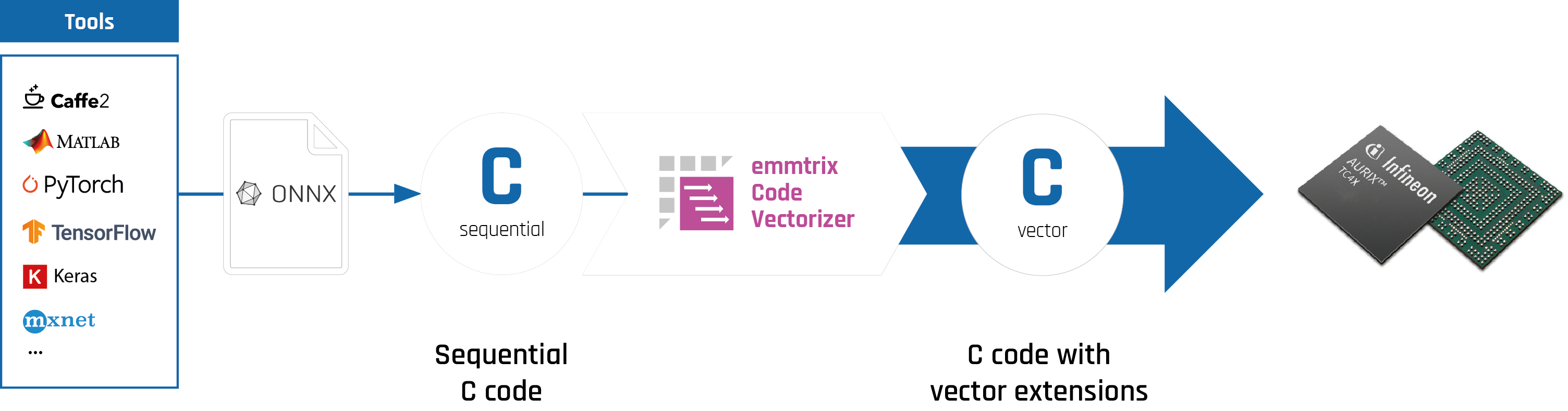

Figure 1: Automating the Conversion to C Code with Vector Extensions for Enhanced Performance

emmtrix provides a user-guided, automated vectorization flow that supports the same input languages as the general parallelization flow. Like parallelization, vectorization is an automated, interactive process: emmtrix Parallel Studio (ePS) performs an initial, automated vectorization for the selected target architecture. The generated code can be run on the target system, e.g., to verify the functionality and timing of the implementation. In addition, ePS provides an interface to connect a cycle-approximate or cycle-accurate simulator for the respective target platform, which returns information on the runtime behavior of the vectorized code. This simplifies work in early project stages or in continuous integration, where target hardware might not be readily available.

The runtime data is presented to the user as feedback on the success of the vectorization. If necessary, the user can trigger transformations to improve the result, and this cycle is repeated until the developer is satisfied with the vectorization. ePS applies the code changes automatically; the developer does not write any vectorized code in the process.

Code transformations that the developer can apply include typical loop transformations such as fission, fusion, splitting and unrolling, as well as memory layout optimizations such as padding.
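To illustrate one of these transformations, here is a minimal, generic sketch of loop fission in plain C. It is not output of emmtrix Parallel Studio, and the function names `combined` and `fissioned` are hypothetical. The original loop mixes an element-wise product with a running sum; splitting it isolates the element-wise part as a vectorization candidate while producing identical results.

```c
#include <stddef.h>

/* Original loop: an element-wise product is mixed with a running sum.
 * The running sum depends on the previous iteration (a loop-carried
 * dependency), which makes the loop as a whole hard to vectorize. */
void combined(const float *a, const float *b, float *prod, float *prefix,
              size_t n)
{
    float acc = 0.0f;
    for (size_t i = 0; i < n; ++i) {
        prod[i] = a[i] * b[i];  /* independent across iterations */
        acc += a[i];            /* depends on the previous iteration */
        prefix[i] = acc;
    }
}

/* After loop fission: the first loop is purely element-wise and is a
 * candidate for vectorization; the dependent running sum stays in its own
 * scalar loop. Loop fusion is the inverse transformation. */
void fissioned(const float *a, const float *b, float *prod, float *prefix,
               size_t n)
{
    for (size_t i = 0; i < n; ++i)
        prod[i] = a[i] * b[i];

    float acc = 0.0f;
    for (size_t i = 0; i < n; ++i) {
        acc += a[i];
        prefix[i] = acc;
    }
}
```

Both functions perform the same operations in the same order, so their results are bit-identical; only the loop structure changes.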

Automatic vectorization requires a lot of information about the target hardware: the number of operations that can execute in parallel, the width of the vector registers, the supported data types and instructions including their latency and throughput, and the memory hierarchy including bandwidths and latencies. All this information is captured in a comprehensive hardware model. The model also defines the syntax of the generated code, which depends heavily on the target's hardware and software environment. This could be, for example, (inline) assembly, intrinsic functions that the compiler translates to machine code, or a C language extension that expresses functionality and memory allocation in a more developer-friendly way.
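As a rough sketch of how such parameters feed into vectorization decisions, the following C code models a tiny, hypothetical subset of a hardware model. The struct `hw_model`, its fields, and the helper functions are illustrative assumptions, not emmtrix's actual model format. With an assumed 512-bit register, 32-bit floats give 16 lanes, matching the 16 parallel elements used in the matrix multiplication example.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical, heavily simplified hardware model. A real model also
 * records supported instructions with latency and throughput, and the
 * memory hierarchy with bandwidths and latencies. */
struct hw_model {
    unsigned vector_register_bits;  /* width of one vector register */
    bool supports_fp32;             /* example of a supported data type */
    unsigned fma_latency_cycles;    /* example instruction latency */
};

/* Number of elements of the given size that fit into one vector register. */
unsigned lanes(const struct hw_model *hw, size_t element_bytes)
{
    return hw->vector_register_bits / (unsigned)(element_bytes * 8u);
}

/* Vector iterations needed for n elements; a partially filled last
 * vector still counts as one iteration (rounding up). */
size_t vector_iterations(const struct hw_model *hw, size_t n,
                         size_t element_bytes)
{
    size_t l = lanes(hw, element_bytes);
    return (n + l - 1) / l;
}
```

For example, with `struct hw_model hw = {512, true, 4};` (illustrative values, not the specification of any real target), `lanes(&hw, sizeof(float))` is 16, and a 15-element row fits into a single vector iteration.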

In the following, we demonstrate the workflow with a simple but non-trivial example: the multiplication of two 15×15 matrices. The algorithm itself is simple, but vectorizing it requires some code transformations to achieve good performance.
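For reference, a straightforward sequential version of this example might look like the sketch below. It assumes single-precision floats stored row-major in flat arrays; the function name `matmul_scalar` is hypothetical.

```c
#include <stddef.h>

#define N 15  /* matrix dimension from the example */

/* Scalar reference: C = A * B for N x N matrices stored row-major in flat
 * arrays, so element (r, c) lives at index r * N + c. */
void matmul_scalar(const float *A, const float *B, float *C)
{
    for (size_t i = 0; i < N; ++i) {
        for (size_t j = 0; j < N; ++j) {
            float sum = 0.0f;
            for (size_t k = 0; k < N; ++k)
                /* A[i][k] is contiguous in k; B[k][j] jumps N floats per step */
                sum += A[i * N + k] * B[k * N + j];
            C[i * N + j] = sum;
        }
    }
}
```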

Figure 2: Vectorization Example

To calculate each element of the result matrix, the scalar product of the corresponding row vector of matrix A and column vector of matrix B must be computed. In a parallel implementation of this scheme, up to 16 elements of the row vector are multiplied element-wise with the corresponding elements of the column vector in parallel, and the products are summed up. Before this, however, the vectors must be loaded into the vector registers. The row vector of matrix A is stored contiguously in memory, so its elements can be loaded with a single operation. The elements of a column vector of matrix B, however, are spread across the memory of matrix B, one row apart. Gathering them therefore requires up to 16 individual memory accesses, one per element, which cancels out any acceleration that vectorization could achieve.
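The cost of reading a column can be made concrete with a small sketch, assuming the same row-major layout of single-precision floats. The helper `gather_column` is hypothetical; it simply shows that every element of a column comes from a different row, `N` elements away from the previous one.

```c
#include <stddef.h>

#define N 15  /* matrix dimension from the example */

/* Hypothetical helper: copy column j of a row-major N x N matrix into a
 * contiguous buffer. Consecutive column elements lie N floats apart, so
 * each one needs its own memory access instead of one vector load. */
void gather_column(const float *B, size_t j, float *col)
{
    for (size_t k = 0; k < N; ++k)
        col[k] = B[k * N + j];  /* stride of N elements between reads */
}
```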

This can be remedied by transposing matrix B, i.e., exchanging its rows and columns. In the resulting memory layout, the column vectors of the original matrix are stored contiguously, so each can be read with a single memory access. Finally, the inner loop is unrolled by the number of parallel operations supported by the vector accelerator. This allows the computation to be mapped automatically and efficiently onto the accelerator's instructions, which yields a significant speedup compared to sequential execution.
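The transformed algorithm can be sketched in plain C as follows. This is an illustration of the transformations, not the code emmtrix Parallel Studio generates. It assumes 16 parallel lanes (`VLEN`, an assumption) and single-precision floats, and pads each 15-element row to 16 with zeros, an instance of the padding optimization mentioned earlier. For brevity, the 16 lanes are written as a fixed-length inner loop rather than 16 separate statements; the names `prepare` and `matmul_transposed` are hypothetical.

```c
#include <stddef.h>
#include <string.h>

#define N    15  /* matrix dimension from the example */
#define VLEN 16  /* assumed number of parallel lanes */
#define NP   (((N + VLEN - 1) / VLEN) * VLEN)  /* N rounded up to a multiple of VLEN */

/* Copy A and the transpose of B into zero-padded row-major buffers of row
 * width NP. Row c of Bt holds column c of B, so both operands of every
 * scalar product are now contiguous in memory. */
static void prepare(const float *A, const float *B, float *Ap, float *Bt)
{
    memset(Ap, 0, sizeof(float) * N * NP);
    memset(Bt, 0, sizeof(float) * N * NP);
    for (size_t r = 0; r < N; ++r)
        for (size_t c = 0; c < N; ++c) {
            Ap[r * NP + c] = A[r * N + c];
            Bt[c * NP + r] = B[r * N + c];  /* transpose */
        }
}

/* C = A * B using the transposed, padded layout. The k loop is processed
 * in chunks of VLEN elements; each chunk corresponds to one vector
 * multiply-add, and the zero padding makes the extra lane contribute 0. */
void matmul_transposed(const float *A, const float *B, float *C)
{
    float Ap[N * NP], Bt[N * NP];
    prepare(A, B, Ap, Bt);

    for (size_t i = 0; i < N; ++i) {
        for (size_t j = 0; j < N; ++j) {
            float lane[VLEN] = {0};  /* per-lane partial sums ("vector register") */
            for (size_t kk = 0; kk < NP; kk += VLEN)
                for (size_t l = 0; l < VLEN; ++l)  /* one vector multiply-add */
                    lane[l] += Ap[i * NP + kk + l] * Bt[j * NP + kk + l];
            float sum = 0.0f;
            for (size_t l = 0; l < VLEN; ++l)      /* horizontal reduction */
                sum += lane[l];
            C[i * N + j] = sum;
        }
    }
}
```

Because the products are summed in a different order than in a sequential loop, floating-point results may differ in the last bits for general inputs.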

On the Infineon 32-bit TriCore™ AURIX™ TC4x MCU, the vectorized implementation of the 15×15 matrix multiplication generated by emmtrix Parallel Studio yields a speedup of 17.5x.

AI Workflow: Vectorization of ML Models

Figure 4: Optimize AI with emmtrix: Vectorize ML Models for Hardware Acceleration

The vectorization flow is particularly useful for AI applications. Machine learning (ML) models are often implemented as matrix operations, which are well suited for vectorization, and the emmtrix vectorization flow can accelerate these operations on the target hardware. You can implement your ML model in your favorite framework, such as TensorFlow, Caffe2, MATLAB, or PyTorch, and export it to the ONNX format. The ONNX model is then converted into sequential C code, which serves as input for the emmtrix Code Vectorizer. The resulting vectorized C code can either be simulated or run on the target hardware to get performance feedback and to try out different vectorization strategies. Finally, the resulting code can be integrated into your application.
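To show why ML models map well onto this flow, here is a hypothetical example of sequential C code for a fully connected layer followed by ReLU, roughly what the ONNX operators `Gemm` and `Relu` compute for a single input vector. It is not the output of emmtrix's ONNX-to-C conversion; the function name `dense_relu` and the layer sizes are illustrative assumptions.

```c
#include <stddef.h>

#define IN  4   /* hypothetical layer sizes */
#define OUT 3

/* Fully connected layer followed by ReLU: y = max(0, W * x + b).
 * W is OUT x IN, row-major. The inner loop is a scalar product, the same
 * pattern as in the matrix multiplication example, and is the part a
 * vectorizer would map onto vector instructions. */
void dense_relu(const float *W, const float *b, const float *x, float *y)
{
    for (size_t o = 0; o < OUT; ++o) {
        float acc = b[o];
        for (size_t i = 0; i < IN; ++i)
            acc += W[o * IN + i] * x[i];
        y[o] = acc > 0.0f ? acc : 0.0f;  /* ReLU */
    }
}
```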

Features

- Functional testing of vector code independent of target platform

- Code transformations improving data-level parallelism and optimizing code for vectorization

- Integration of target platform simulators for performance estimation

- Vectorization-aware code generation from Simulink® models

- Code Fusion: block-crossing vectorization of Simulink® models

- Generation of C code with vector extensions using generic libraries or target-specific intrinsics

Your Benefits

- Easy exploitation of parallel vector hardware

- No need to write vectorized code manually

- Limited hardware knowledge required

- Reduced testing effort

- Functional testing without hardware

- Short development cycles

Supported Platforms

The tool supports vectorization for the next generation of Infineon 32-bit TriCore™ AURIX™ TC4x MCUs, MPUs featuring ARM Cortex-A cores with the Neon or Scalable Vector Extension (SVE) instruction sets, x86 processors with the Advanced Vector Extensions (AVX) instruction set, and microprocessors implementing the RISC-V “V” Vector Extension (RVV).

Our generic solution allows us to support additional architectures with ease. Please contact us if you need one.

AURIX™ (TC4x PPU)

Supported Compilers

There is no standard for programming vector instructions in C, so any vectorization tool must be adaptable. The emmtrix Code Vectorizer can easily be adapted to any C programming model, providing flexibility across a range of platforms and compilers.

There are three main vector programming models in the C language:

- Inline Assembly: This approach embeds assembly instructions within the C code, offering fine-grained control over the hardware but requiring deep knowledge of the processor’s architecture.

- Platform-Specific Intrinsics (__builtin functions): These are special functions provided by the compiler to leverage specific hardware features. They offer a more abstract way to access vector units than inline assembly, but still require knowledge of platform-specific details.

- Compiler-Specific Vector C Extension + Platform-Specific Intrinsics: This approach uses compiler-specific extensions to the C language, such as vector data types and overloaded operators, for easier programming, portability, and readability of the vectorized code. However, since the vector C extensions only cover the fundamental features of the vector units, they are combined with platform-specific intrinsics to achieve a balance between portability and performance optimization.

The emmtrix Code Vectorizer supports all three programming models and typically uses the most abstract model to provide easy readability of the generated code.
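As a minimal, generic sketch of the most abstract of these models, the following code uses the vector extension supported by GCC and Clang: `__attribute__((vector_size(16)))` declares a 16-byte vector type on which ordinary C operators work lane by lane. It is not emmtrix output, it omits the platform-specific intrinsics that would usually complement it, and the names `v4f` and `saxpy_vec` are hypothetical.

```c
#include <stddef.h>
#include <string.h>

/* Four 32-bit floats in one 16-byte vector (GCC/Clang vector extension). */
typedef float v4f __attribute__((vector_size(16)));

/* y[i] = a * x[i] + y[i] for n elements, processed four at a time. */
void saxpy_vec(size_t n, float a, const float *x, float *y)
{
    v4f va = {a, a, a, a};  /* broadcast the scalar into all lanes */
    size_t i = 0;
    for (; i + 4 <= n; i += 4) {
        v4f vx, vy;
        memcpy(&vx, x + i, sizeof vx);  /* vector load; memcpy avoids alignment issues */
        memcpy(&vy, y + i, sizeof vy);
        vy = va * vx + vy;              /* ordinary operators act on all four lanes */
        memcpy(y + i, &vy, sizeof vy);  /* vector store */
    }
    for (; i < n; ++i)                  /* scalar loop for the remaining elements */
        y[i] = a * x[i] + y[i];
}
```

The vector body reads almost like scalar C, which is the readability advantage this model offers over inline assembly or raw intrinsics.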

Visit our emmtrix wiki for detailed technical information on platforms and compilers, complementing the tool descriptions on our official website.

For more information on emmtrix Code Vectorizer, or to request a demo, a chat, or a meeting, use our contact form or get in touch directly. We look forward to hearing from you.

Rainer Heim