Inline Transformation

Inline transformation, also known as function inlining or inline expansion, is a compiler optimization that replaces a function call with the actual body of the called function. Instead of executing a separate call instruction and incurring the overhead of passing arguments and returning a result, the compiler inserts the function’s code directly at each call site. This is conceptually similar to a preprocessor macro expansion, but it is performed by the compiler on the intermediate code without altering the original source text. The primary goal of inlining is to improve performance by eliminating function-call overhead and enabling further optimizations. Modern compilers can automatically inline functions they deem profitable, and languages like C/C++ provide an inline keyword to suggest inlining (though the compiler is free to ignore it). Inline expansion can occur at compile time or even later – for instance, link-time optimization (LTO) allows inlining across object files, and Just-In-Time (JIT) runtimes (like the Java HotSpot VM) perform inlining at runtime using profiling information.

Mechanism of Inline Transformation

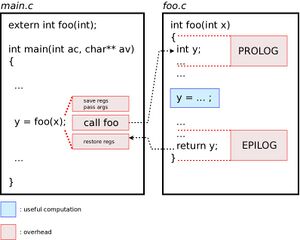

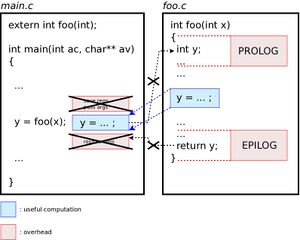

When a function call is inlined, the compiler treats the function much like a code snippet to be substituted into the caller. It will evaluate and assign the function’s arguments to local temporaries (as it would for a normal call), then insert the entire function body at the call site, adjusting variable references and control flow as needed. This means that the normal process of jumping to the function’s code and then returning is bypassed. Ordinarily, a function call requires a branch (transfer of control) to the function, plus setup and teardown instructions (such as saving registers, pushing arguments, and later restoring registers on return) – with inlining, these steps are eliminated so that execution “drops through” directly into the inlined code without a call or return instruction.

Because the function body is now part of the caller, the compiler can optimize across what was once a call boundary. For example, if certain arguments are constants at the call site, those constant values may propagate into the inlined function, allowing the compiler to simplify calculations or remove branches inside the function [2]. In effect, inlining can make two separate functions behave as one larger function, which often enables additional compiler optimizations that would not be possible otherwise.
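As a rough illustration of the substitution and constant propagation described above, the following C sketch shows a small function, a caller, and a hand-written version of what the caller might look like after inlining. The names `scale`, `caller_with_call`, and `caller_after_inlining` are hypothetical; a real compiler performs this step on its intermediate representation and may produce different code.

```c
/* Hypothetical helper: a small function with a branch on its second argument. */
static int scale(int value, int factor)
{
    if (factor == 0) {
        return 0;
    }
    return value * factor;
}

/* Before inlining: the caller passes a constant factor through a call. */
int caller_with_call(int x)
{
    return scale(x, 4);
}

/* After inlining (written by hand for illustration): the body of scale()
 * is substituted at the call site with factor == 4. The test
 * "factor == 0" is known to be false, so the branch can be removed and
 * only the multiplication remains. */
int caller_after_inlining(int x)
{
    return x * 4;
}
```

Both callers compute the same result; the second form simply has no call, no argument passing, and no branch.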

Advantages and Disadvantages

Advantages:

- Eliminating call overhead: Inlining avoids the runtime cost of pushing function arguments, jumping to the function, and returning from it. This can make execution faster, especially for very small or frequently called functions where the call overhead is a significant portion of the runtime cost.

- Enabling further optimizations: By merging the function code into the caller, the compiler gains a wider scope for optimization. It can perform constant propagation, common subexpression elimination, loop optimizations, and other transformations across what used to be a function boundary [2]. For instance, inlined code might expose that a condition is always true in a particular context, allowing dead code elimination in the combined code.

- Potentially improved performance and smaller code for tiny functions: If a function is very simple (e.g., just returns a calculation or a field) and is called often, inlining it might not only speed up execution but could reduce code size by removing the call/return sequence. (In some cases, the overhead of a call is larger than the function body itself.) Inlining such small functions can both save time and avoid duplicate function call setup code, yielding faster and sometimes smaller binaries [2].

- Better use of instruction pipeline: Eliminating function calls can help the CPU’s instruction pipeline and branch predictor. With no branch to an external function, there’s no risk of misprediction or pipeline flush for that call, which can improve the instruction flow continuity (though this benefit is context-dependent).

Disadvantages:

- Code size increase (code bloat): The biggest drawback of inlining is that it duplicates the function’s code at every call site. If a function is inlined in N places, there will be N copies of its body in the final program. This can dramatically increase the compiled code size, especially for larger functions or many call sites. A larger binary can negatively impact instruction cache usage and paging.

- Instruction cache pressure: Excessive inlining can hurt performance by filling up the CPU’s instruction cache with repeated code. When code size grows too large, it may no longer fit in fast instruction caches, leading to more cache misses and slower execution. In other words, beyond a certain point, the lost I-cache efficiency outweighs the saved function call overhead. As a rule of thumb, some inlining improves speed at a minor cost in space, but too much inlining can reduce speed due to cache effects and increased binary size.

- Diminishing returns for large functions: Inlining a large function can embed a lot of code into callers, which might not be worth the small constant overhead of a function call. Compilers often refuse to inline functions that are “too large” because the benefit doesn’t justify the cost. In fact, most compilers ignore an `inline` request if the function’s size exceeds their heuristic limits. Inlining deeply recursive functions is also usually avoided (or limited to a few unrolls) because it would blow up code size, and unbounded recursion cannot be fully inlined at all.

- Compilation time and memory: While not usually a major concern, inlining can increase compile time and memory usage in the compiler, since the optimizer now has to work with larger functions after inlining. Extremely aggressive inlining (especially via compiler flags) might slow down compilation and produce larger intermediate code for the compiler to process.

- Debugging and profiling complexity: When a function is inlined, it no longer exists as a separate entity in the compiled output, which can complicate debugging. For example, setting breakpoints or getting stack traces for inlined functions is harder because they don’t have their own stack frame. Similarly, performance profilers might attribute time spent in an inlined function to the caller, which can be confusing. (Modern debuggers and profilers do have support for inlined code, but it can still be less straightforward than with regular function calls.)

In general, inlining is most beneficial for small, frequently-called functions (such as simple getters or arithmetic functions) and in performance-critical code paths. It is usually counterproductive for large or infrequently-called functions. Compilers use sophisticated heuristics to decide an optimal balance (discussed below), and they may ignore a programmer’s inline suggestion if it would lead to worse results.

Implementation in Popular Compilers

Modern compilers provide various ways to control or hint at inline transformation, including language keywords, special attributes, and optimization flags. Notably, the decision to inline is ultimately made by the compiler’s optimizer, which considers factors such as function size, complexity, and the chosen optimization level. Depending on the optimization settings (e.g., -O0 vs. -O3), inlining behavior can vary significantly. Below is how inline expansion is handled in a few popular C/C++ compilers:

Heuristics for Inlining

Compilers use heuristics to decide when inlining is beneficial. These heuristics balance performance improvements against potential code bloat. Factors considered include:

- Function size: Small functions are more likely to be inlined, while large functions may be rejected due to code growth concerns.

- Call frequency: Frequently called functions are strong candidates for inlining to reduce call overhead.

- Control flow complexity: Functions with loops, branches, or recursion are less likely to be inlined unless explicitly forced.

- Compiler optimization level: Higher optimization levels (e.g., `-O3`) increase the aggressiveness of inlining, while `-O0` typically disables it.

- Interprocedural analysis: Some compilers perform whole-program analysis (e.g., GCC with LTO, MSVC with LTCG, Intel ICC/ICX) to determine profitable inlining across translation units.

Each compiler has its own implementation of these heuristics, which evolve over time to balance performance and maintainability.

Always Inline Attribute

Compilers offer mechanisms to enforce inlining of functions even when regular inlining is disabled (e.g. by -O0).

- GCC & Clang/LLVM: Both compilers support `__attribute__((always_inline))`, ensuring that a function is inlined even when optimizations are disabled. If inlining is not possible (e.g., due to recursion or taking the function’s address), an error or warning is emitted. [3]

- MSVC: Offers the `__forceinline` keyword, which strongly suggests inlining but does not guarantee it. If inlining is not feasible, the function remains out-of-line, and the compiler may issue a warning.
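A minimal sketch of how these compiler-specific spellings are often wrapped in one macro is shown below. The macro name `FORCE_INLINE` and the functions `square` and `sum_of_squares` are hypothetical and chosen only for illustration; the fallback branch assumes a compiler that at least understands plain `inline`.

```c
/* FORCE_INLINE is a hypothetical macro name, not part of any compiler. */
#if defined(_MSC_VER)
#define FORCE_INLINE static __forceinline
#elif defined(__GNUC__) || defined(__clang__)
#define FORCE_INLINE static inline __attribute__((always_inline))
#else
#define FORCE_INLINE static inline /* plain hint on other compilers */
#endif

/* Small helper that the caller below wants expanded at every call site. */
FORCE_INLINE int square(int x)
{
    return x * x;
}

/* Each call to square() is requested to be inlined into this function. */
int sum_of_squares(int a, int b)
{
    return square(a) + square(b);
}
```

Calling `sum_of_squares(3, 4)` yields 25 whether or not the compiler honors the request; the attribute changes only how the code is generated, not what it computes.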

No Inline

In some cases, it is beneficial to prevent a function from being inlined, such as when debugging, reducing code bloat, or avoiding unintended performance regressions. Most compilers offer explicit mechanisms to disable inlining:

- GCC & Clang: Both support `__attribute__((noinline))`, which ensures that a function is never inlined, regardless of optimization settings. Additionally, the compiler flag `-fno-inline` can be used to disable inlining globally.

- MSVC: Provides the `__declspec(noinline)` attribute to prevent function inlining. The compiler option `/Ob0` disables all inlining, even for functions marked as `inline`.
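The attributes above can be hidden behind a single macro in the same way. In this sketch, `NO_INLINE`, `checked_divide`, and `average` are hypothetical names; the example assumes the goal is to keep a rarely used helper as a separate function, for instance so that it shows up on its own in a debugger or profiler.

```c
/* NO_INLINE is a hypothetical macro name, not part of any compiler. */
#if defined(_MSC_VER)
#define NO_INLINE __declspec(noinline)
#elif defined(__GNUC__) || defined(__clang__)
#define NO_INLINE __attribute__((noinline))
#else
#define NO_INLINE /* no known spelling: leave the decision to the compiler */
#endif

/* Helper that should stay out-of-line. Returns 0 for a zero denominator. */
NO_INLINE static int checked_divide(int numerator, int denominator)
{
    if (denominator == 0) {
        return 0;
    }
    return numerator / denominator;
}

/* The call below is requested to remain a real call at any optimization level. */
int average(int total, int count)
{
    return checked_divide(total, count);
}
```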

Clang/LLVM Inline Implementation

LLVM uses a cost-based heuristic to decide whether a function should be inlined at a given call site. This decision is primarily handled by the InlineCost analysis, located in the files InlineCost.cpp and InlineCost.h. The inline parameters that influence this decision are encapsulated in the InlineParams structure.

The InlineParams structure defines the thresholds and conditions that govern LLVM’s inlining decisions. These values vary based on the optimization level (-O0 to -O3).

| Parameter | Description | Default Values |
|---|---|---|
| DefaultThreshold | Standard threshold for inlining decisions. If the computed inline cost is below this value, the function is likely to be inlined. | 0 (-O0), 75 (-O1), 225 (-O2), 275 (-O3) |
| HintThreshold | Threshold for functions marked as inline, influencing their likelihood of being inlined. | Higher than DefaultThreshold |
| ColdThreshold | Lower threshold for cold functions that are rarely executed. | Lower than DefaultThreshold |
| OptSizeThreshold | Threshold when optimizing for size (-Os). | Lower than DefaultThreshold |
| OptMinSizeThreshold | Threshold when optimizing for minimal size (-Oz). | Lower than OptSizeThreshold |
| HotCallSiteThreshold | Increased threshold for call sites that are frequently executed (hot paths). | Higher than DefaultThreshold |
| LocallyHotCallSiteThreshold | Threshold for call sites considered locally hot relative to their function entry. | Similar to HotCallSiteThreshold |
| ColdCallSiteThreshold | Threshold for cold call sites, discouraging inlining. | Lower than DefaultThreshold |
| ComputeFullInlineCost | Determines whether the full inline cost should always be computed. | true |
| EnableDeferral | Allows the inlining decision to be deferred for later analysis. | true |
| AllowRecursiveCall | Specifies whether recursive functions are allowed to be inlined. | false (-O0 to -O2), true (-O3) |

Clang’s optimization remarks can help in understanding inlining decisions. For example, the flags -Rpass=inline and -Rpass-missed=inline report at compile time which functions were inlined, which were not, and why. This can be useful when tuning code for inlining with Clang. The heuristics themselves (function size thresholds, etc.) are continuously refined in LLVM’s development, but generally align with the goal of balancing performance gain against code growth.
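The way a per-call-site cost is compared against one of several thresholds can be sketched as follows. This is not LLVM's code: the struct fields only mirror a few parameter names from the table, the order in which the conditions are checked is an assumption, and any threshold values supplied by a caller are placeholders.

```c
#include <stdbool.h>

/* Simplified, hypothetical stand-in for a subset of InlineParams. */
struct inline_params {
    int default_threshold;        /* cf. DefaultThreshold */
    int hint_threshold;           /* cf. HintThreshold */
    int cold_call_site_threshold; /* cf. ColdCallSiteThreshold */
    int hot_call_site_threshold;  /* cf. HotCallSiteThreshold */
};

/* Hypothetical description of one call site. */
struct call_site {
    int  cost;                   /* estimated cost of inlining the callee here */
    bool callee_has_inline_hint; /* callee is marked inline */
    bool is_hot;                 /* call site executes frequently */
    bool is_cold;                /* call site executes rarely */
};

/* Pick the threshold that applies to this call site (assumed priority order). */
static int select_threshold(const struct inline_params *p,
                            const struct call_site *cs)
{
    if (cs->is_cold) {
        return p->cold_call_site_threshold;
    }
    if (cs->is_hot) {
        return p->hot_call_site_threshold;
    }
    if (cs->callee_has_inline_hint) {
        return p->hint_threshold;
    }
    return p->default_threshold;
}

/* Inline only if the estimated cost is below the selected threshold. */
bool should_inline(const struct inline_params *p, const struct call_site *cs)
{
    return cs->cost < select_threshold(p, cs);
}
```

With a default threshold of 225 and a higher hint threshold, a callee of cost 240 would be rejected at an ordinary call site but accepted if it carries an inline hint, which is the effect the table describes.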

Challenges and Limitations

Deciding when to inline a function is a complex problem, and compilers use sophisticated heuristics to make this decision. Inlining provides a trade-off between speed and size, and the “right” amount of inlining can depend on the target CPU, the overall program structure, and runtime behavior of the code. Some of the challenges and considerations include:

- Predicting performance impact is non-trivial: While removing a function call generally improves execution speed, the net effect on a large program is not always positive. Inlining can increase or decrease performance depending on many factors. For example, inlining might speed up one part of the code but cause another part to slow down due to cache misses. The compiler has to predict whether inlining a particular function at a particular call site will be beneficial overall, which cannot be done with perfect accuracy. As studies and experience have shown, no compiler can always make the optimal inlining decision because it lacks full knowledge of runtime execution patterns and hardware microarchitectural effects [4]. The instruction cache behavior is especially critical: a program that fit in cache before might overflow it after inlining one too many functions, causing performance to drop. These complex interactions mean that inlining decisions are essentially heuristic guesses aimed at a balance.

- Compiler heuristics: Modern compilers treat the inlining decision as an optimization problem. They often set a “budget” for code growth and try to inline the most beneficial calls without exceeding that budget. This is sometimes modeled like a knapsack problem: choosing which function calls to inline to maximize estimated performance gain for a given allowable increase in code size. The heuristics involve metrics such as the size of the function (in internal intermediate representation instructions), the number of call sites, and the estimated frequency of each call. For instance, a call inside a loop that runs thousands of times is more profitable to inline than a call in a one-off initialization function. Compilers also consider whether inlining a function will enable subsequent optimizations: if inlining a function exposes a constant or a branch that can simplify the code, the compiler gives that more weight. These factors are combined into a cost/benefit analysis for each call site. If the estimated benefit (e.g., saved cycles) outweighs the cost (e.g., added instructions and bytes of code), the call is inlined; otherwise it’s left as a regular call [4]. Different compilers (and even different versions of the same compiler) use different formulas and thresholds for this. For example, GCC and Clang assign a certain “cost” to the function based on IR instruction count and adjust it if the function is marked `inline` (which gives a hint benefit) or if the call is in a hot path, etc. MSVC similarly has internal thresholds and will inline more aggressively at `/Ob2` than at `/Ob1`. These heuristics are continually refined to produce good results across typical programs.

- Profile-guided inlining: One way to improve inlining decisions is to use profile-guided optimization (PGO). PGO involves compiling the program, running it on sample workloads to gather actual execution frequencies of functions and branches, and then feeding that profile data back into a second compilation. With PGO, the compiler knows which functions are actually hot (called frequently in practice) and which call sites are executed often. This information can greatly inform the inlining heuristics: for example, the compiler might inline a function it knows is called millions of times a second, but not inline another function that is rarely used, even if they are similar in size. Using PGO, compilers can be bolder about inlining hot paths and avoid code bloat on cold paths. That said, the gains from PGO-based inlining, while real, are often in the single-digit percentages of performance [4]. It helps the compiler make more informed decisions, but it doesn’t fundamentally eliminate the trade-offs. In some cases PGO might even cause slight regressions if the profile data misleads the heuristics (e.g., if the runtime usage differs from the training run). Still, PGO is a valuable tool for squeezing out extra performance by fine-tuning inlining and other optimizations based on actual usage.

- Limitations and overrides: There are practical limits to inlining. Compilers will not inline a function in certain scenarios, no matter what: for example, a recursive function usually can’t be fully inlined (it would lead to infinite code expansion), although some compilers will unroll a recursion a fixed number of times if marked inline. If a function’s address is taken (meaning a pointer to the function is used), most compilers have to generate an actual function body for it, and they might not inline all calls either because the function now needs to exist independently [5]. Virtual function calls in C++ cannot be inlined unless the compiler can deduce the exact target (e.g., the object’s dynamic type is known or the function is devirtualized); thus, inlining across a polymorphic call often requires whole-program analysis or final devirtualization. Additionally, as mentioned earlier, compilers impose certain limits to avoid pathological code expansion: GCC, for instance, has parameters like `inline-unit-growth` and `max-inline-insns-single` that prevent inlining from blowing up the code more than a certain factor [6]. These ensure that even under `-O3`, the compiler won’t inline everything blindly and will stop if the function grows too large due to inlining.

- Link-time optimization (LTO): Traditional compilation limits inlining to within a single source file (translation unit) because the compiler can only see one .c/.cpp file at a time. Link-time optimization lifts this restriction by allowing inlining (and other optimizations) to occur across translation unit boundaries at link time. With LTO enabled (for example, `gcc -flto` or MSVC’s `/LTCG`), the compiler effectively sees the entire program or library, so it can inline functions from one module into another. This means even if you didn’t mark a function `inline` or put it in a header, LTO might inline it if it’s beneficial. For instance, a small utility function defined in one source file and called in another could be inlined during LTO, whereas without LTO that call would remain a regular function call (because the compiler wouldn’t have seen the function’s body while compiling the caller). LTO thus increases the scope of inlining and can yield significant performance improvements for codebases split across many files. One common use of LTO-driven inlining is for library functions: the compiler might inline standard library functions or other library calls if LTO is enabled and it has the library’s code. The downside is that LTO can make compile times (or link times) longer and increase memory usage during compilation, due to the larger optimization scope. Also, the same caution applies: even with whole-program visibility, the compiler still uses heuristics to decide what to inline [4]. Having more opportunities to inline (thanks to LTO) doesn’t mean it will inline everything; it still must choose carefully to avoid overwhelming code bloat or slower performance from cache misses.

- Alternate strategies: In cases where inlining is not beneficial or possible, other optimizations may be preferable. For example, compilers might use outline strategies (the opposite of inline) to reduce code size; that is, they might decide not to inline to keep code small (especially at `-Os` or in constrained environments). Another strategy is partial inlining, where the compiler might extract and inline only a portion of a function. GCC introduced something along these lines (sometimes called “IPA-split” or partial inlining) where it tries to inline the hot parts of a function into callers and keep the cold parts out-of-line, as a compromise. This is advanced and not directly under user control, but it shows that inlining doesn’t have to be all-or-nothing.
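The budget-based, knapsack-like selection mentioned in the list above can be sketched with a simple greedy pass. This is only an approximation chosen for brevity, not the algorithm of any particular compiler: the `candidate` struct, its fields, and `choose_inlines` are hypothetical, and the benefit and size numbers would come from the compiler's own estimates.

```c
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical record for one call site that could be inlined. */
struct candidate {
    const char *name; /* label for the call site */
    int benefit;      /* estimated cycles saved if inlined */
    int size_cost;    /* estimated code growth, assumed > 0 */
    int inlined;      /* set to 1 if the call site is chosen */
};

/* Order candidates by benefit per unit of size, best first.
 * Cross-multiplication avoids floating-point division. */
static int by_ratio_desc(const void *a, const void *b)
{
    const struct candidate *x = a;
    const struct candidate *y = b;
    long long lhs = (long long)x->benefit * y->size_cost;
    long long rhs = (long long)y->benefit * x->size_cost;
    return (lhs < rhs) - (lhs > rhs);
}

/* Greedy selection: take candidates in ratio order while they still fit
 * into the remaining growth budget. Sorts the array in place and returns
 * the total code growth that was spent. */
int choose_inlines(struct candidate *c, size_t n, int growth_budget)
{
    int used = 0;
    qsort(c, n, sizeof c[0], by_ratio_desc);
    for (size_t i = 0; i < n; i++) {
        c[i].inlined = 0;
        if (c[i].size_cost <= growth_budget - used) {
            c[i].inlined = 1;
            used += c[i].size_cost;
        }
    }
    return used;
}
```

Given a small getter, a call inside a hot loop, and a call in one-off initialization code, a tight budget would typically be spent on the first two, matching the intuition that frequently executed calls to small functions are the most profitable to inline.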

In summary, inline transformation is a powerful optimization, but it must be applied with care. Compilers provide keywords and options to guide inlining, but they also rightfully employ their own models to decide when inlining makes sense. As a developer, a good practice is to trust the compiler for general decisions, and only force inlining in cases where you have clear evidence (via profiling or knowledge of the code) that the compiler’s heuristic might be missing an opportunity. Tools like optimization reports or profile-guided optimization can assist in making those decisions. Ultimately, inline transformation is one of many tools in the compiler’s toolbox, and its effectiveness will vary – some code speeds up dramatically with inlining, while in other cases excessive inlining can degrade performance. The key is balancing those effects, a task that modern compilers handle through continual refinement of their inlining algorithms.

Inline Transformation in emmtrix Studio

emmtrix Studio can implement inlining using #pragma directives or via the GUI. Inline is a transformation that inlines function bodies. It replaces function calls with their implementation.

Typical Usage and Benefits

The transformation is used to reduce the overhead caused by function calls and to increase the potential for optimizations. The latter shows up as fewer obstacles to parallelization and more opportunities for other transformations to be applied.

Example

In the following code, all function calls within main are inlined.

```c
#include <stdio.h>

void func() {
    printf("Hello World \n");
}

#pragma EMX_TRANSFORMATION Inline
int main() {
    func();
    return 0;
}
```

The following code is the generated code after the transformation has been applied.

```c
#include <stdio.h>

int main(void) {
    printf("Hello World \n");
    return 0;
}
```

Parameters

The following parameters can be set (the table lists each parameter’s keyword in pragma syntax, its default value, and a description):

| Id | Default Value | Description |
|---|---|---|
| remove_unused | true | Remove unused inlined functions: any inlined function that is unused after inlining is removed. |

External Links

- Inline expansion - Wikipedia

References

- [1] C Programming Techniques: Function Call Inlining - Fabien Le Mentec https://www.embeddedrelated.com/showarticle/172.php

- [2] Inline - Using the GNU Compiler Collection (GCC) https://gcc.gnu.org/onlinedocs/gcc-4.1.2/gcc/Inline.html

- [3] Function Attributes - Using the GNU Compiler Collection (GCC) https://gcc.gnu.org/onlinedocs/gcc-4.6.4/gcc/Function-Attributes.html

- [4] c++ - Link-time optimization and inline - Stack Overflow https://stackoverflow.com/questions/7046547/link-time-optimization-and-inline

- [5] Inline Functions (C++) | Microsoft Learn https://learn.microsoft.com/en-us/cpp/cpp/inline-functions-cpp?view=msvc-170

- [6] Optimize Options (Using the GNU Compiler Collection (GCC)) https://gcc.gnu.org/onlinedocs/gcc/Optimize-Options.html